Making sure software works correctly is essential to shipping a product users can rely on. A key tool that helps with this is a Test Management System (TMS): software that helps teams plan, run, track, and report on their software tests.

But testing modern software can be tricky. Teams face challenges like dealing with large amounts of test information, making sure tests match requirements, avoiding human mistakes, and meeting tight deadlines.

This is where Artificial Intelligence (AI) comes in. AI is becoming a powerful way to improve how a test management system works.

What is a Test Management System (TMS)?

A test management system (TMS) is a software tool that helps teams plan, run, and keep track of their software testing. It’s like a central hub where you can write test cases, assign them to team members, check their progress, and report any issues found.

Testing is a key part of building software: it confirms that everything works as it should before it reaches users. However, as software gets more complicated and teams need to release updates quickly, testing by hand becomes slow and error-prone. AI can take on much of that manual load.

Why AI Matters in Modern TMS

Today, testing has to keep up with fast-moving projects and complex programs. Doing it all manually takes too much time and can miss problems, which might delay releases or upset users.

AI acts like a smart helper in a test management system. It can handle repetitive tasks, spot where bugs are likely to hide, and let testers focus on the most important parts of their work. This makes testing faster and more consistent, which helps teams deliver better software on schedule.

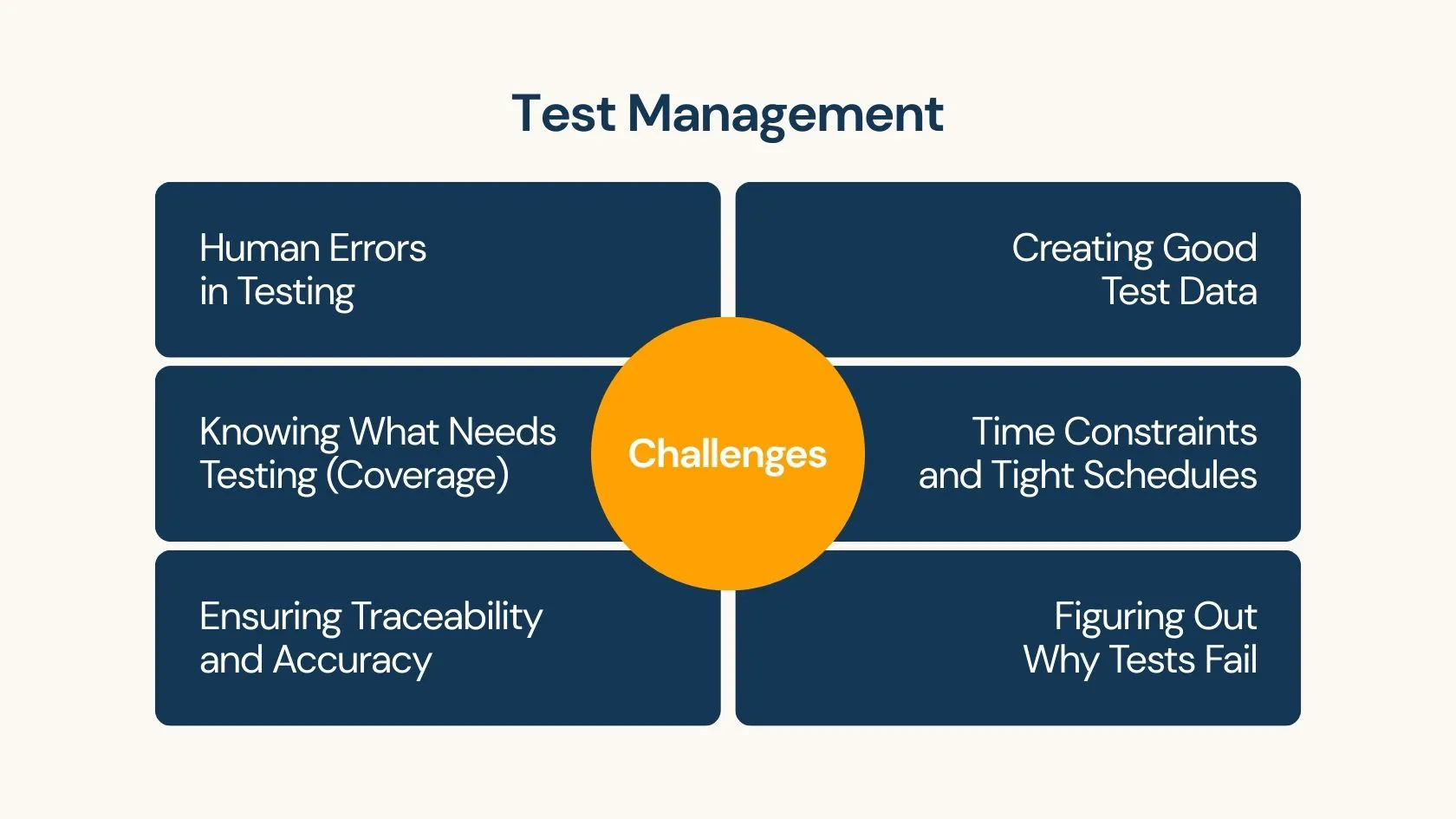

Common Challenges in Test Management

Testing software is rarely straightforward. Even with a test management system in place, teams run into obstacles that complicate workflows and hurt quality.

Challenge 1: Human Errors in Testing

Testers are human, so they sometimes make mistakes. They might skip a step, misread a requirement, or enter the wrong data when using the test management system, letting bugs slip through unnoticed.

These small errors can grow into bigger issues if not caught early, especially in complex projects where one mistake can affect many parts of the software.

Tiredness also plays a role. Long hours or repetitive tasks can wear testers down, making them more likely to overlook problems. While a test management system can help catch some errors, testing still relies on people’s attention to detail, which isn’t always perfect.

Challenge 2: Knowing What Needs Testing (Coverage)

It's hard to be sure that the tests cover all the important parts of the software without testing the same thing too many times. Gaps in coverage mean bugs can be missed, while redundant tests waste time and resources.

Without a clear picture of test coverage, teams might unknowingly leave critical functions untested, leading to unexpected bugs appearing after release. Alternatively, they might spend valuable time running too many similar tests for less important areas, wasting resources that could be better used elsewhere. Manually figuring out the right balance across large applications is a complex puzzle.

Challenge 3: Ensuring Traceability and Accuracy

Every test should link to a specific need in the software, like a feature or a fix. But when those needs change mid-project, it’s hard to keep the tests up to date.

If tests don’t match the latest requirements, key parts of the software might go untested, leaving room for bugs that could have been caught earlier.

This challenge is tricky, especially when multiple teams are involved. Miscommunication or poor documentation can break the links between tests and requirements, making it unclear if everything’s been covered. Tools can help track these connections, but only if everyone stays on top of updates.

Challenge 4: Creating Good Test Data

Making fake data for testing that feels real and covers all situations is hard and takes time. Sometimes the data isn't realistic enough, or sensitive information isn't protected properly when using copies of real data. Without good test data, tests might not find certain bugs.

Using unrealistic data can lead to tests passing when they should fail (false negatives) or failing when nothing is actually wrong (false positives), either hiding real problems or wasting time on false alarms. If production data copies are used without proper masking, it creates serious security risks. Manually creating diverse data sets that cover all edge cases and variations is extremely time-consuming and often incomplete.

Challenge 5: Time Constraints and Tight Schedules

Deadlines in software development are often tight, and testing gets squeezed. Teams are forced to rush through tests, which can mean skipping steps or missing issues. This pressure to deliver quickly can leave bugs in the final product, causing problems for users down the line.

Sometimes, stakeholders don’t fully grasp the importance of thorough testing, pushing for faster releases instead. Testers then have to juggle doing a good job with meeting tight schedules, which is a tough balance to strike.

Challenge 6: Figuring Out Why Tests Fail

When an automated test fails, it can take a lot of digging through logs and code to understand the real reason why. This investigation slows down the process of fixing bugs.

This manual detective work not only delays bug fixes but also pulls developers away from building new features. It can become a significant bottleneck in the development cycle, especially in complex systems where a single failure could have multiple potential causes spread across different components.

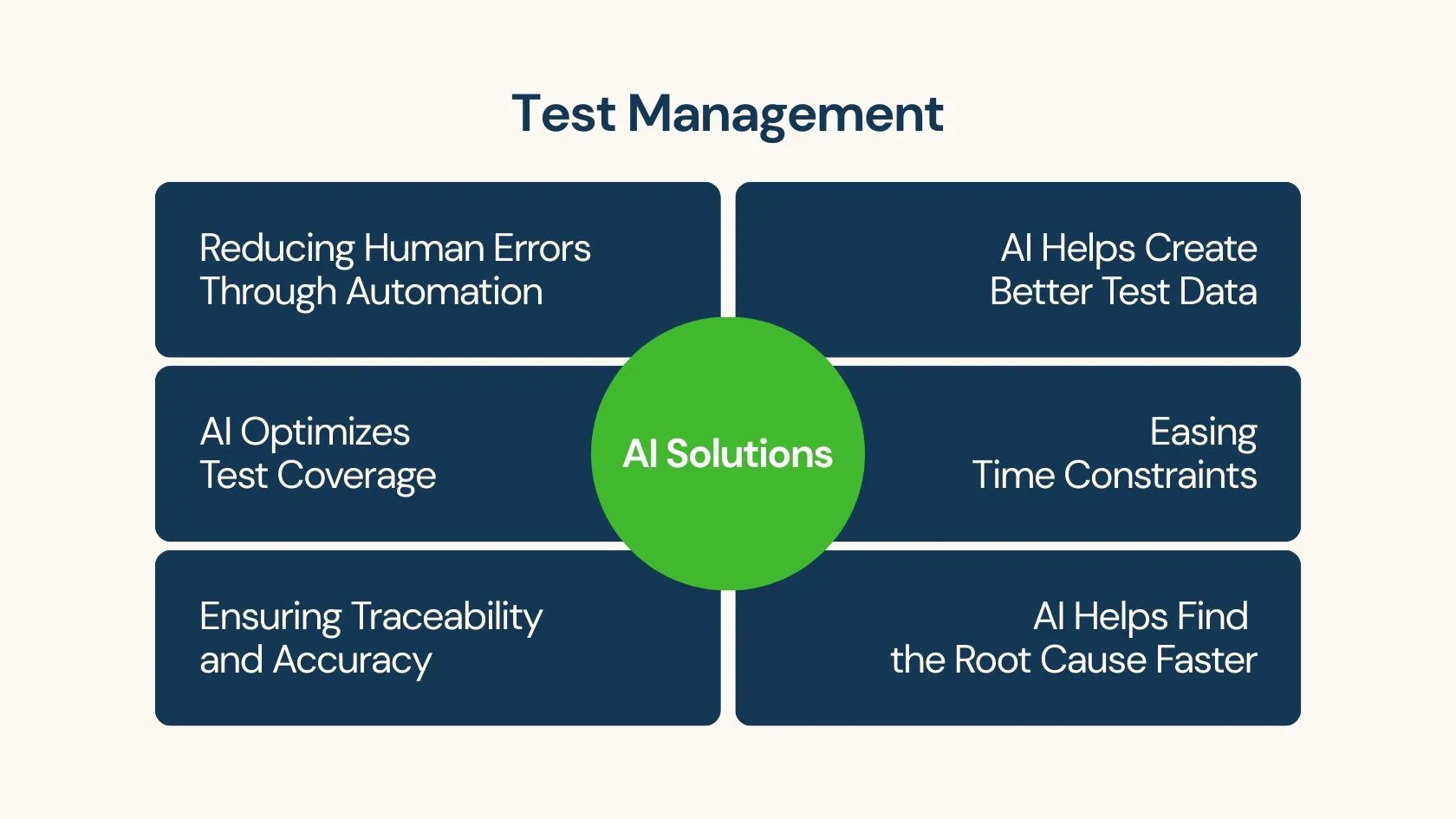

How AI is Solving These Challenges

Artificial Intelligence (AI) is transforming test management by tackling its toughest problems head-on.

Solution 1: Reducing Human Errors Through Automation

AI handles the repetitive tasks that burden users of a test management system and often lead to mistakes. It runs routine checks the same way every time, so testers don’t have to.

When people get tired or distracted, errors creep in, but AI stays sharp no matter how long it works. By taking over boring jobs like logging results into the test management system, it frees testers to focus on creative work where their skills shine.

This isn’t about replacing humans—it’s about giving them a reliable partner. For beginners, think of AI as a teammate who never forgets a step, while pros see it as automation that boosts quality without adding stress.

Solution 2: AI Optimizes Test Coverage

AI can look at the software requirements, code changes, and the existing tests stored in the test management system to find gaps or overlaps. It suggests which tests to add or remove to get the best coverage efficiently.

By analyzing factors like code changes, requirement complexity, and historical defect data, AI can pinpoint high-risk areas needing more attention. This allows teams to focus their testing efforts where they matter most, increasing the chances of finding critical bugs and building confidence in the software's quality, all tracked within the test management system.
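The gap-and-overlap idea can be illustrated without any machine learning at all. Here is a minimal sketch, assuming each test case lists the requirement IDs it verifies. The function name `coverage_report` and the `REQ-`/`TC-` identifiers are made up for illustration and do not come from any particular tool.

```python
from collections import defaultdict

def coverage_report(requirements, tests):
    """Return (uncovered requirement ids, groups of tests covering identical requirement sets)."""
    covered = set()
    by_signature = defaultdict(list)
    for test_id, req_ids in tests.items():
        covered.update(req_ids)
        # Tests that verify exactly the same requirements are overlap candidates.
        by_signature[frozenset(req_ids)].append(test_id)
    gaps = sorted(set(requirements) - covered)
    overlaps = sorted(sorted(ids) for ids in by_signature.values() if len(ids) > 1)
    return gaps, overlaps

requirements = ["REQ-1", "REQ-2", "REQ-3"]
tests = {
    "TC-1": ["REQ-1"],
    "TC-2": ["REQ-1"],
    "TC-3": ["REQ-2"],
}
gaps, overlaps = coverage_report(requirements, tests)
print(gaps)      # ['REQ-3']
print(overlaps)  # [['TC-1', 'TC-2']]
```

An AI-assisted tool would go further, for example by comparing the wording of test steps to catch near-duplicates, but the output has the same shape: a list of gaps to fill and a list of overlaps to review.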

Solution 3: Ensuring Traceability and Accuracy

AI helps keep tests linked to software requirements. It can update those connections, or flag them for review, when requirements change.

Mid-project shifts can break traceability, leaving gaps that let bugs slip through. An AI-assisted system can watch requirements and tests for changes as they happen and flag links that have gone stale. This makes it much less likely that a feature goes untested, even when deadlines loom or requirements evolve fast.
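One simple way to picture a traceability check is to compare dates: if a requirement changed after its test was last reviewed, the link is suspect. The sketch below assumes that both dates are available; `stale_tests` and the field names `requirement` and `reviewed` are hypothetical.

```python
from datetime import date

def stale_tests(requirements, tests):
    """Return ids of tests whose requirement is missing or changed after the last test review."""
    stale = []
    for test_id, info in tests.items():
        req_changed = requirements.get(info["requirement"])
        # A missing requirement means the link itself is broken.
        if req_changed is None or req_changed > info["reviewed"]:
            stale.append(test_id)
    return sorted(stale)

# requirement id -> date of its last change
requirements = {"REQ-1": date(2024, 5, 10), "REQ-2": date(2024, 4, 1)}
tests = {
    "TC-1": {"requirement": "REQ-1", "reviewed": date(2024, 5, 1)},
    "TC-2": {"requirement": "REQ-2", "reviewed": date(2024, 4, 15)},
    "TC-3": {"requirement": "REQ-9", "reviewed": date(2024, 4, 15)},
}
print(stale_tests(requirements, tests))  # ['TC-1', 'TC-3']
```

Here TC-1 is flagged because REQ-1 changed after the test was reviewed, and TC-3 is flagged because it points at a requirement that no longer exists.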

Solution 4: AI Helps Create Better Test Data

AI can automatically generate realistic and varied test data based on requirements or patterns it learns, without exposing private information. It can also create data for tricky edge cases testers might miss. This saves time and helps tests cover more possibilities.

AI tools integrated with the test management system can generate specific data types needed for thorough testing, such as boundary values, invalid inputs for negative testing, or large volumes of production-like data with sensitive information automatically masked. This ensures tests are more robust, cover a wider range of scenarios, and comply with data privacy regulations.
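Two of the data types mentioned above, boundary values and masked production-like data, are easy to show in a few lines. This is a rule-based sketch rather than an AI model, and the helper names `boundary_values` and `mask_email` are assumptions made for the example.

```python
import hashlib

def boundary_values(minimum, maximum):
    """Values at and just outside the edges of an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]

def mask_email(email):
    """Replace the local part of an email with a stable token derived from a hash.

    Illustration only: a real masking tool would use a salted or keyed hash.
    """
    local, _, domain = email.partition("@")
    token = hashlib.sha256(local.encode("utf-8")).hexdigest()[:8]
    return f"user_{token}@{domain}"

# An age field that accepts 18 to 65: the first and last values are invalid inputs.
print(boundary_values(18, 65))  # [17, 18, 19, 64, 65, 66]

masked = mask_email("jane.doe@example.com")
print(masked.endswith("@example.com"), "jane" in masked)  # True False
```

Because the token is derived from the original value, the same customer always maps to the same masked address, so relationships between records survive the masking.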

Solution 5: Easing Time Constraints and Tight Schedules

AI speeds up testing to fit tight deadlines. It can run and triage tests far faster than a person working by hand.

When time’s short, teams might skip steps, but AI can prioritize the most critical tests so key features are covered first. This helps keep quality high even when the clock’s ticking, and reduces the risk that testing delays a release.
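Prioritizing under a deadline can be sketched as a simple greedy selection. The example assumes each test already has a risk score between 0 and 1 (in practice this is where a model would come in) and an estimated run time; the `prioritize` function and the sample numbers are hypothetical.

```python
def prioritize(tests, budget_minutes):
    """Greedy selection: highest risk per minute first, until the time budget is used up."""
    ranked = sorted(tests, key=lambda t: t["risk"] / t["minutes"], reverse=True)
    selected, used = [], 0
    for test in ranked:
        # Skip any test that would push the run past the budget.
        if used + test["minutes"] <= budget_minutes:
            selected.append(test["id"])
            used += test["minutes"]
    return selected

tests = [
    {"id": "checkout", "risk": 0.9, "minutes": 10},
    {"id": "login", "risk": 0.6, "minutes": 2},
    {"id": "profile", "risk": 0.1, "minutes": 5},
    {"id": "search", "risk": 0.4, "minutes": 8},
]
print(prioritize(tests, budget_minutes=15))  # ['login', 'checkout']
```

A greedy pick like this is not guaranteed to be the best possible use of the budget, but it is easy to explain to stakeholders, which matters when tests are being left out.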

Solution 6: AI Helps Find the Root Cause Faster

AI can analyze test failure logs, application logs, and recent code changes together, often directly within or integrated with the test management system. It can quickly point developers to the likely source of the problem by identifying patterns associated with the failure, speeding up debugging significantly.

These AI systems correlate information from various sources like application performance metrics, detailed logs, and version control history, often accessible through the test management system. By quickly highlighting the most likely cause or the exact code change that introduced the issue, AI dramatically reduces debugging time and helps teams learn from past failure patterns.
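The correlation step can be pictured as a scoring exercise: which recent commit touched files that the failing tests depend on? Below is a minimal sketch, assuming a known mapping from tests to the source files they exercise. The function `suspect_commits`, the commit IDs, and the file names are all invented for illustration.

```python
from collections import Counter

def suspect_commits(failed_tests, test_files, commits):
    """Rank commits by how many failing tests exercise a file the commit changed."""
    scores = Counter()
    for commit_id, changed in commits.items():
        changed_set = set(changed)
        for test_id in failed_tests:
            if changed_set & set(test_files.get(test_id, [])):
                scores[commit_id] += 1
    return scores.most_common()

failed_tests = ["test_pay", "test_refund"]
test_files = {
    "test_pay": ["payment.py", "cart.py"],
    "test_refund": ["payment.py"],
    "test_login": ["auth.py"],
}
commits = {"a1f3": ["auth.py"], "b7c2": ["payment.py"], "c9d4": ["cart.py"]}
print(suspect_commits(failed_tests, test_files, commits))
# [('b7c2', 2), ('c9d4', 1)]
```

Commit `b7c2` comes out on top because both failing tests depend on the file it changed. Real tools add more signals, such as log patterns and performance metrics, but the output is the same kind of ranked shortlist for a developer to check first.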

How AI Is Being Used in Test Management Today

AI is changing the game in test management, turning tough challenges into manageable tasks.

AgileTest: Test Management & AI Generator for Jira

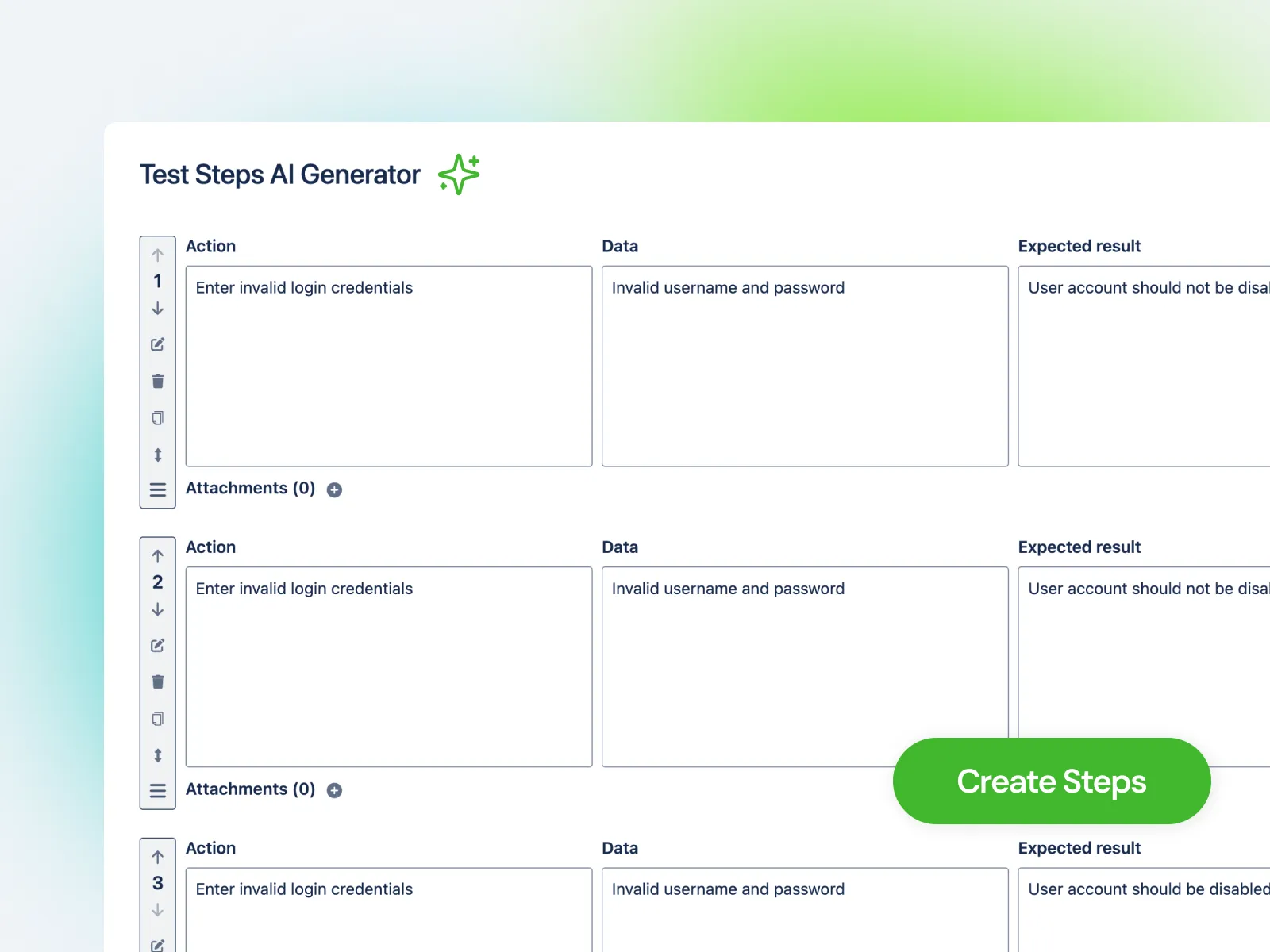

AgileTest utilizes AI within its Jira test management app primarily through its AI Test Generator. This feature directly addresses the time-consuming task of writing test cases.

By analyzing requirements or user input, the AI automatically suggests relevant test cases and detailed steps, which can then be reviewed and added to the test plan within the test management system.

This application of AI aims to accelerate the test design phase, improve consistency in test step definition, and allow QA teams to focus more on test execution and analysis rather than manual test writing.

Testingy's AI Test Generation

Testingy integrates AI to accelerate test creation. Its AI Generator analyzes the descriptions of requirements stored in issues. Based on this analysis, it automatically suggests relevant test cases and detailed test steps needed to verify that requirement.

Teams can review the AI-generated tests, edit them as needed (modifying summaries or descriptions), and then select which ones to formally create and link back to the requirement. This feature aims to save time in the test design phase and ensure requirements have corresponding test coverage.

Testim’s AI-Based Stability

Testim leverages AI to help teams create stable automated tests more quickly. Its AI can assist in capturing user actions during test recording, even for complex scenarios. A key capability highlighted is its self-healing feature, where the AI adapts test scripts automatically when it detects changes in the application's code or UI, reducing test maintenance efforts.

The system aims to make tests more resilient to minor application updates, allowing QA teams to focus on testing new functionality rather than constantly fixing broken scripts. Reliable tests help ensure smoother releases.
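To get a feel for what "self-healing" means in general, here is a toy sketch of one common idea: record several ways to find an element and fall back to the next one when the first stops working. This is not Testim's implementation or API. The page is modeled as a plain list of dictionaries, and `find_element` is a hypothetical helper.

```python
def find_element(page, locators):
    """Try each recorded locator in order; return the first match and the locator that worked."""
    for attribute, value in locators:
        for element in page:
            if element.get(attribute) == value:
                return element, (attribute, value)
    return None, None

# After an application update, the button's id changed from "btn-submit" to "btn-submit-v2".
page = [
    {"tag": "button", "id": "btn-submit-v2", "text": "Submit", "name": "submit"},
    {"tag": "a", "id": "help", "text": "Help"},
]
locators = [("id", "btn-submit"), ("name", "submit"), ("text", "Submit")]
element, used = find_element(page, locators)
print(used)  # ('name', 'submit')
```

The test keeps running because the `name` attribute still matches, and the tool can then suggest updating the stored `id` so the primary locator works again next time.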

Sauce Labs’ Scalable Cloud Testing

Sauce Labs provides a cloud-based platform for large-scale automated testing across a vast array of browsers, operating systems, and real devices. This ensures applications function correctly for users on different setups, from mobile devices to desktops.

While primarily known for its scale, Sauce Labs integrates with various tools and testing frameworks. Its platform allows teams to run many tests in parallel, identifying platform-specific bugs efficiently, which is crucial for apps targeting diverse user environments.

Future Trends in AI for Test Management

AI is already making test management smarter, but the best is yet to come. Here are five trends that will shape the future, pushing testing to new levels of speed and accuracy.

Smarter AI Models for Defect Prediction

AI will get better at flagging likely defects before they reach users. It’ll dig deeper into data to find hidden risks.

Future models will learn from millions of past tests, catching patterns no human could see. For a banking app, this could mean flagging a rare crash in payment code early. Teams will fix issues faster, saving headaches down the line.

These smarter systems will also adapt to new software types, like AI-driven apps themselves. A gaming company could use this to predict glitches in virtual reality features. Testing will feel like it has a sixth sense.
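Today's starting point for defect prediction is much simpler than the models described above: count how often past changes to each module introduced a bug. The sketch below is that kind of baseline, with a small smoothing term so modules with little history are not scored as perfectly safe. The function `defect_risk` and the sample history are hypothetical.

```python
def defect_risk(history):
    """Estimate, per module, the share of past changes that introduced a defect.

    Uses Laplace smoothing: (defects + 1) / (changes + 2).
    """
    return {
        module: (stats["defects"] + 1) / (stats["changes"] + 2)
        for module, stats in history.items()
    }

history = {
    "payments": {"changes": 40, "defects": 12},
    "settings": {"changes": 50, "defects": 2},
    "reports": {"changes": 0, "defects": 0},
}
risk = defect_risk(history)
for module in sorted(risk, key=risk.get, reverse=True):
    print(module, round(risk[module], 2))
```

Note that the brand-new `reports` module scores 0.5, higher than `payments`: with no history at all, the baseline treats it as uncertain rather than safe. Smarter models would replace the counting with learned patterns, but the goal is the same ranked list of risky areas.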

Seamless Integration with CI/CD Pipelines

AI will blend smoothly into nonstop development workflows. It’ll test code as soon as it’s written.

In CI/CD setups, AI will run checks instantly, catching bugs during every update. For a news app, this ensures new features, like live alerts, work perfectly before hitting users. Releases will stay fast without sacrificing quality.

This integration will also balance speed and coverage. A retail site could push daily updates while AI verifies checkout flows. Testing will keep pace with even the quickest projects.
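Balancing speed and coverage in a pipeline often comes down to running only the tests that a change can affect. Here is a minimal sketch of that selection step, assuming a hand-maintained map from source files to tests; an AI-assisted tool would learn or infer this map instead. The names `select_tests` and `test_map` are made up for the example.

```python
def select_tests(changed_files, test_map, always_run=()):
    """Pick the tests mapped to any changed file, plus a fixed smoke set."""
    selected = set(always_run)
    for path in changed_files:
        # Files with no known tests contribute nothing; the smoke set still runs.
        selected.update(test_map.get(path, []))
    return sorted(selected)

test_map = {
    "checkout/cart.py": ["test_cart", "test_checkout_flow"],
    "checkout/payment.py": ["test_payment", "test_checkout_flow"],
    "search/index.py": ["test_search"],
}
print(select_tests(["checkout/payment.py"], test_map, always_run=["test_smoke"]))
# ['test_checkout_flow', 'test_payment', 'test_smoke']
```

For the retail example, a change to the payment code triggers the payment and checkout flow tests on every push, while the search tests wait for the full nightly run.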

Better Test Environment Management

AI will simplify setting up test environments. It’ll pick the right setups automatically.

Managing virtual devices or cloud platforms is tricky, but AI will configure them to match real-world use. For a fitness app, this means testing on phones and watches without manual tweaks. Teams will save hours getting started.

AI will also spot when environments drift from reality, like an outdated browser version. A streaming service could avoid bugs from mismatched setups. Testing will be smoother and more reliable.
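Spotting drift is, at its core, a comparison between what the environment should look like and what it actually looks like. The sketch below shows that comparison for component versions. The function `environment_drift` and the version numbers are hypothetical.

```python
def environment_drift(expected, actual):
    """Return {component: (expected, actual)} for every component that differs or is missing."""
    drift = {}
    for component, wanted in expected.items():
        found = actual.get(component)
        if found != wanted:
            drift[component] = (wanted, found)
    return drift

expected = {"chrome": "126", "node": "20.11", "postgres": "16"}
actual = {"chrome": "119", "node": "20.11"}
print(environment_drift(expected, actual))
# {'chrome': ('126', '119'), 'postgres': ('16', None)}
```

In this example the test environment has an outdated browser and is missing its database entirely. The part AI could add is deciding what "expected" should be, for instance by looking at which versions real users are running.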

Enhanced Human-AI Collaboration

AI will work closer with testers, not replace them. It’ll suggest ideas while humans make big calls.

Future tools will highlight risky areas and let testers decide how to tackle them. For a travel app, AI might flag booking errors, but testers choose the best fix. This teamwork blends machine speed with human smarts.

The collaboration will feel natural, like a trusted coworker. In a healthcare project, AI could crunch data while testers focus on patient privacy rules. Testing will get the best of both worlds.

Greater Use of Natural Language Processing (NLP)

AI will let teams write tests in plain English. It’ll turn words into detailed test cases.

With NLP, anyone can describe a feature—like a login page—and AI will build the tests. For a social media app, saying “check if logout works” could trigger full checks. This makes testing easier for non-tech folks.

The AI will also refine vague instructions into precise steps. A retail team could say “test payment options,” and AI would cover cards and wallets. Testing will open up to everyone on the team.

Conclusion

Artificial Intelligence is changing software testing, especially when used with a test management system. AI helps solve common testing problems by automating routine work, finding bugs more accurately, keeping tests linked to requirements, and speeding up the testing process.

AI tools are already making a difference by making teams more efficient and reducing mistakes. As AI gets better, it will work even more closely with test management systems to make testing smarter and faster. Using AI in a test management system isn't just helpful anymore—it's becoming necessary to build great software quickly and reliably.