What stands between a flawless app and a buggy disaster? Often, it’s the humble test case, a small but mighty tool in software development. Testing is the heartbeat of quality assurance, ensuring that apps, websites, and systems work as promised.

At its core, a test case is a structured way to check if something does what it’s supposed to do. Whether you’re a developer, a tester, or just curious about how software gets polished, understanding test cases is key.

In this guide, we’ll answer three questions: what is a test case, why does it matter, and how is it built? We’ll also cover the challenges test cases bring and how to manage them effectively.

What is a test case exactly?

A test case is a documented set of conditions, steps, inputs, and expected outcomes designed to verify a specific aspect of a system. You can think of it as a recipe: you follow precise instructions to see whether the dish, your software, turns out right.

Its job? To validate that everything from a login button to a database query works as intended, whether that’s about functionality, performance, or meeting user requirements.

Moreover, test cases have a few defining traits: they’re specific (no guesswork), measurable (clear pass or fail), and repeatable (same result every time). They’re also tied to what the software should do, often rooted in requirements or user stories.

Don’t confuse a test case with a test script. A test script usually refers to automated testing, while a test case can be manual or automated. And unlike a test plan, which sets the big-picture strategy, a test case is a tactical, hands-on tool.

Why are test cases important?

Why bother with test cases? Simple: they’re the guardians of quality. They ensure that the software meets its design and functional goals, catching glitches before users do.

Imagine a payment system that fails mid-transaction because no one tested it with a declined card—test cases prevent those nightmares by spotting issues early, saving time and money down the line.

They’re also a communication lifeline, helping developers, testers, and stakeholders speak the same language. With a test case, everyone knows what’s been checked and how. Plus, they leave a paper trail—proof of what worked (or didn’t).

Take a real-world example: in 2012, a trading software glitch cost Knight Capital $440 million in about 45 minutes, partly because a flawed deployment put untested code paths into production. Test cases guard against that kind of failure and build trust, ensuring users get a product they can rely on.

Example of a test case format

Test cases aren’t random—they follow a structured format for clarity and consistency. Here’s a sample presented in a table to illustrate how they’re typically laid out:

| Field | Details |
|---|---|
| Test Case ID | TC001 |
| Title | Verify user can reset password with valid email |
| Description | User has an account; email is registered |
| Steps | |
| Expected results | The system sends a reset link to the email |
| Actual results | (Filled later, e.g., "Link sent successfully.") |
| Status | Pass/Fail |

Each field serves a purpose: the ID tracks it uniquely, the precondition sets the stage, the steps guide the tester, and the results confirm success or failure.

Formats can vary; some teams might add fields like priority or test data, but the goal remains a clear, repeatable process. This example tests a password reset, but it could easily adapt to check a checkout flow or a search feature.
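For teams that keep test cases in code or export them from a tool, the fields in the sample table map naturally onto a small record type. The sketch below is one possible shape in Python, not a standard schema: the class name `CaseRecord`, the `Status` enum, the `record` method, and the step wording are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    NOT_RUN = "Not run"
    PASS = "Pass"
    FAIL = "Fail"


@dataclass
class CaseRecord:
    """A test case with the fields from the sample table (names are illustrative)."""
    case_id: str
    title: str
    precondition: str
    steps: list[str]
    expected_result: str
    actual_result: str = ""           # filled in after the test is executed
    status: Status = Status.NOT_RUN

    def record(self, actual_result: str) -> None:
        """Store the observed result and mark the case Pass or Fail.

        A plain string comparison stands in for the tester's judgment here.
        """
        self.actual_result = actual_result
        self.status = (
            Status.PASS if actual_result == self.expected_result else Status.FAIL
        )


# Hypothetical step wording; the ID, title, and expected result mirror the sample.
tc001 = CaseRecord(
    case_id="TC001",
    title="Verify user can reset password with valid email",
    precondition="User has an account; email is registered",
    steps=[
        "Open the password reset page",
        "Enter the registered email address",
        "Submit the form",
    ],
    expected_result="The system sends a reset link to the email",
)
tc001.record("The system sends a reset link to the email")
print(tc001.case_id, tc001.status.value)
```

Keeping the status separate from the actual result makes it easy to list cases that have not been run yet, which a free-text field would hide.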

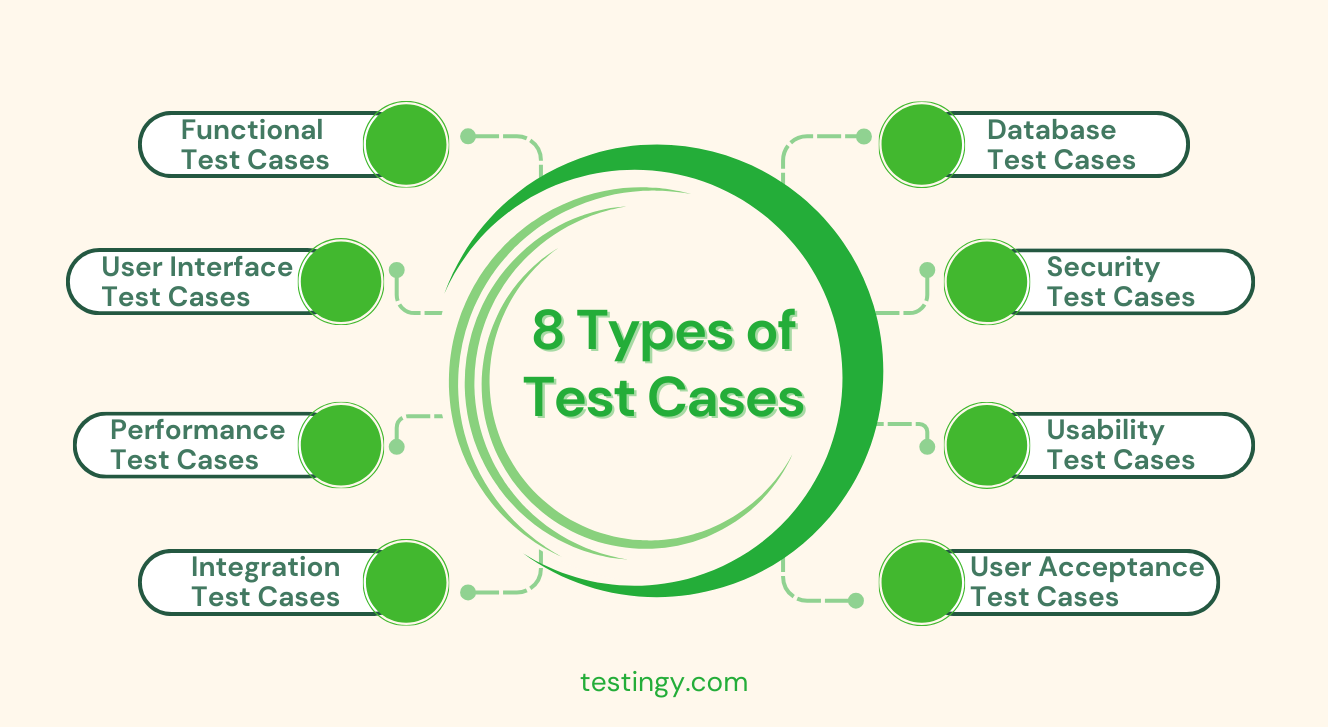

Types of test cases

Test cases differ based on what they target, ensuring all aspects of a system are validated.

Here’s a list of eight key types with brief descriptions:

- Functional Test Cases: Test specific features to ensure they work as designed (e.g., "Does the login button log you in?").

- User Interface Test Cases: Check the look and feel of visual elements like buttons and menus (e.g., "Is the error message clear when a form is blank?").

- Performance Test Cases: Measure speed and stability under load (e.g., "Can the app handle 1,000 users at once?").

- Integration Test Cases: Verify that different system parts work together (e.g., "Does the UI talk to the payment system correctly?").

- Database Test Cases: Confirm data is stored and retrieved accurately (e.g., "Does a new user entry save properly?").

- Security Test Cases: Ensure protection against threats and breaches (e.g., "Can the login block a hacking attempt?").

- Usability Test Cases: Assess how easy the software is to use (e.g., "Can users find settings quickly?").

- User Acceptance Test Cases: Validate that the system meets user needs before launch (e.g., "Does buying an item feel smooth for customers?").

These types often overlap—a functional test might check UI too—but each zeroes in on a unique quality goal. Together, they form a safety net for the software.

Challenges of test cases

Test cases sound great, but they come with trade-offs. They’re essential for quality, yet creating, executing, and maintaining them can test a team’s patience and skill.

Below, we outline the key obstacles, each a hurdle worth understanding, and why they complicate testing.

1. Time-consuming

Writing test cases takes time and can slow down development sprints. Crafting detailed steps—like testing a login feature for valid credentials, invalid inputs, and edge cases—demands effort, especially when requirements are unclear.

As a result, testers might spend hours figuring out what “works as expected” means, delaying progress and forcing teams to weigh thorough testing against tight deadlines.
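One common way to contain that effort is to write the check once and list the valid, invalid, and edge-case inputs as rows of data. The following is a minimal sketch of that idea; the `login` function, the `USERS` store, and the specific rows are hypothetical stand-ins for a real system.

```python
# Hypothetical system under test: a tiny in-memory credential check.
USERS = {"alice": "s3cret!"}


def login(username: str, password: str) -> bool:
    """Return True only for a known user with the matching password."""
    return username in USERS and USERS[username] == password


# Each row is one test case: (description, username, password, expected result).
LOGIN_CASES = [
    ("valid credentials", "alice", "s3cret!", True),
    ("wrong password", "alice", "guess", False),
    ("unknown user", "bob", "s3cret!", False),
    ("empty password", "alice", "", False),
    ("username differs only by case", "ALICE", "s3cret!", False),
]


def run_login_cases() -> list[str]:
    """Run every row and return the descriptions of the rows that failed."""
    failures = []
    for description, username, password, expected in LOGIN_CASES:
        if login(username, password) != expected:
            failures.append(description)
    return failures


print(run_login_cases())
```

Adding a new edge case then means adding one row rather than writing a new set of steps.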

2. Vague goals

Vague requirements lead to vague test cases, undermining their purpose. If a spec says “handle errors gracefully” without specifics, testers have to guess what to check. They might confirm that an error message appears but never ask whether it actually helps users.

Such ambiguity creates tests that fail to catch critical issues, surfacing only when users run into problems.

3. Rigid tests

Over-specific test cases become rigid and fragile as software changes. A test expecting a “Submit” button with a blue background fails if it’s renamed “Confirm” in green, even if the function’s fine. This brittleness requires constant rewrites, draining time and making it hard to adapt to a shifting codebase.
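The button example can be sketched in code to show the difference between checking cosmetics and checking behavior. This is an illustration under simplifying assumptions: the `Button` class, the `order-submit` ID, and both check functions are hypothetical and do not correspond to any real UI testing API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Button:
    """Simplified stand-in for a UI element (hypothetical model, not a real UI API)."""
    element_id: str
    label: str
    color: str
    on_click: Callable[[], str]


def place_order() -> str:
    return "order placed"


def brittle_check(button: Button) -> bool:
    """Passes only while the cosmetic details stay exactly the same."""
    return button.label == "Submit" and button.color == "blue"


def behavior_check(button: Button) -> bool:
    """Finds the button by its stable ID and verifies what clicking it does."""
    return button.element_id == "order-submit" and button.on_click() == "order placed"


before = Button("order-submit", "Submit", "blue", place_order)
after = Button("order-submit", "Confirm", "green", place_order)  # restyled, same function

print(brittle_check(before), brittle_check(after))
print(behavior_check(before), behavior_check(after))
```

The behavior-based check survives the redesign because it depends only on a stable identifier and the outcome of the click. If the label or color is itself a requirement, it is better covered by a separate, deliberately narrow UI test.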

4. Environmental issues

Running tests can falter when the test environment doesn’t match production. A test might pass in a clean dev setup but fail in the real world with spotty networks or messy data.

For example, a database test works with a small data set but crashes with millions of records. These mismatches waste time as teams diagnose whether the issue is in the code or the setup.

5. Unreliable tests

Then there are flaky tests, which pass one day and fail the next and erode confidence along the way. A UI test might fail if a page loads slowly due to network issues, or an integration test might fail when an external API is down. This inconsistency leaves teams questioning whether a failure signals a bug or just a quirk, adding effort to pinpoint the cause.
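A simple way to tell a flaky test from a genuine failure is to rerun the same check several times and look at how consistent the outcomes are. The sketch below assumes a check is any function returning `True` or `False`; the names `classify` and `scripted` are hypothetical, and the scripted outcomes simulate an unreliable dependency so the example stays deterministic.

```python
from typing import Callable, Iterable


def classify(check: Callable[[], bool], runs: int = 5) -> str:
    """Run the same check several times and label how consistent it is."""
    results = [check() for _ in range(runs)]
    if all(results):
        return "stable pass"
    if not any(results):
        return "stable fail"
    return "flaky"


def scripted(outcomes: Iterable[bool]) -> Callable[[], bool]:
    """Build a check that replays fixed outcomes, simulating an unreliable dependency."""
    iterator = iter(outcomes)
    return lambda: next(iterator)


print(classify(lambda: True))
print(classify(scripted([True, False, True, True, False])))
print(classify(scripted([False] * 5)))
```

A "stable fail" points toward a real defect, while a "flaky" label suggests looking at timing, network, or external services before touching the code under test.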

These challenges don’t kill the value of test cases—they just underscore the need for sharp skills, robust tools, and disciplined processes to keep them effective.

How to manage test cases effectively

So, how do you tame the test case beast? Start with strategy. You should prioritize high-risk areas, like testing the payment system before tweaking a font size.
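One way to make "high-risk first" concrete is to score each candidate by how likely it is to break and how much damage a failure would do, then sort. The scoring scale, the `Candidate` class, and the example numbers below are assumptions chosen for illustration, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    likelihood: int  # 1 (rarely breaks) to 5 (breaks often)
    impact: int      # 1 (cosmetic) to 5 (loses money or data)

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(candidates: list[Candidate]) -> list[Candidate]:
    """Order test candidates from highest to lowest risk score."""
    return sorted(candidates, key=lambda c: c.risk, reverse=True)


backlog = [
    Candidate("Font size on settings page", likelihood=2, impact=1),
    Candidate("Payment with declined card", likelihood=3, impact=5),
    Candidate("Password reset email", likelihood=2, impact=4),
]
for candidate in prioritize(backlog):
    print(candidate.risk, candidate.name)
```

With these example scores, the payment case comes first and the font size check last, matching the intuition in the paragraph above.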

Make test cases modular, with reusable steps for common tasks, to save time. Keep them under version control so they stay in sync with code updates.
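Modularity can be pictured as small step functions that several test cases share. The sketch below models an application session as a plain dictionary so it runs on its own; every function name and field here is hypothetical.

```python
# Hypothetical application state, kept in a plain dict so the sketch is runnable.
def new_session() -> dict:
    return {"page": "home", "user": None, "cart": []}


# Reusable steps: each takes the session, performs one action, and returns it.
def sign_in(session: dict, user: str) -> dict:
    session["user"] = user
    session["page"] = "dashboard"
    return session


def add_to_cart(session: dict, item: str) -> dict:
    session["cart"].append(item)
    return session


def open_settings(session: dict) -> dict:
    session["page"] = "settings"
    return session


# Two test cases built from the same sign-in step.
def case_cart_contains_item() -> bool:
    session = add_to_cart(sign_in(new_session(), "alice"), "book")
    return session["cart"] == ["book"]


def case_settings_reachable() -> bool:
    session = open_settings(sign_in(new_session(), "alice"))
    return session["page"] == "settings" and session["user"] == "alice"


print(case_cart_contains_item(), case_settings_reachable())
```

If the sign-in flow changes, only `sign_in` needs updating, and every case that uses it picks up the change.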

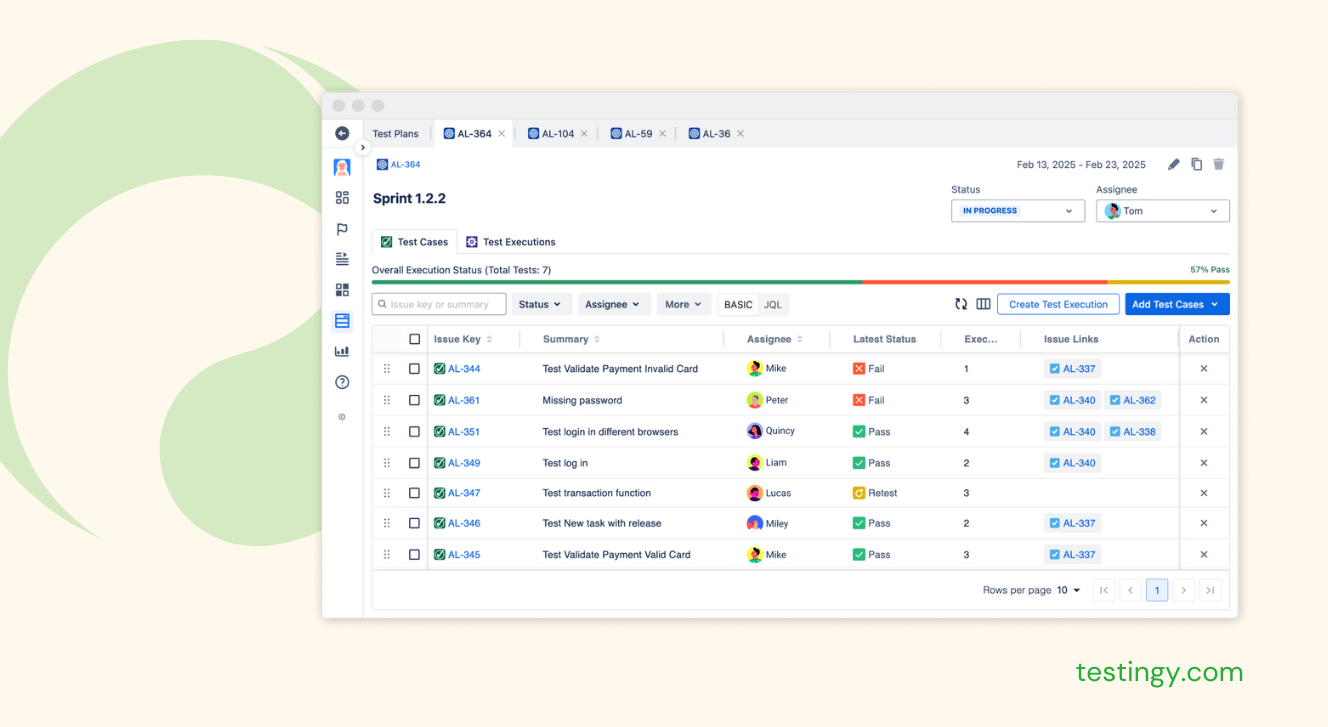

Tools matter. Small teams can get by with Excel, but a dedicated tool such as Testingy suits bigger projects. It lets testers write detailed cases with step-by-step instructions, preconditions, and even screenshots of their tests.

Last but not least, don’t forget to track metrics like pass/fail rates or test coverage to find weak spots. It’s not just about writing tests; it’s about keeping them useful and lean.
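Both metrics reduce to simple ratios. The sketch below assumes statuses are stored as the strings "Pass", "Fail", and "Not run", and it interprets coverage as the share of requirements with at least one linked test case, which is only one of several possible definitions. The function names and sample data are hypothetical.

```python
def pass_rate(statuses: list[str]) -> float:
    """Share of executed test cases that passed, as a value between 0 and 1."""
    executed = [s for s in statuses if s in ("Pass", "Fail")]
    if not executed:
        return 0.0
    return executed.count("Pass") / len(executed)


def requirement_coverage(requirements: set[str], covered: set[str]) -> float:
    """Share of requirements that have at least one test case linked to them."""
    if not requirements:
        return 0.0
    return len(requirements & covered) / len(requirements)


statuses = ["Pass", "Pass", "Fail", "Pass", "Not run"]
print(pass_rate(statuses))  # 3 of the 4 executed cases passed
print(requirement_coverage({"R1", "R2", "R3", "R4"}, {"R1", "R2", "R4"}))
```

Excluding cases that were never run keeps the pass rate from looking worse, or better, than the executed tests justify.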

Benefits of writing high-quality test cases

Investing in high-quality test cases that are clear, precise, and well-structured pays off big. Here’s why they’re worth the effort:

- Fewer bugs: Thorough, well-written cases catch issues early, reducing defects in production.

- Faster debugging: Clear steps and expected results pinpoint exactly where things go wrong, speeding up fixes.

- Better coverage: Thoughtful cases test edge cases and critical paths, leaving no stone unturned.

- Improved collaboration: Precise language ensures that testers, devs, and stakeholders understand what’s being validated.

- Higher reusability: Well-crafted cases can be reused across projects or versions, saving time.

- Easier automation: Structured cases translate smoothly into automated scripts, boosting efficiency.

- Enhanced test case management: High-quality cases are easier to organize, update, and track, reducing clutter.

- Increased confidence: Reliable tests prove the software works, reassuring teams and clients alike.

By writing top-notch test cases, you can also streamline management. They’re less likely to become obsolete quickly, simpler to prioritize, and more compatible with version control, keeping your testing process smooth and scalable.
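To illustrate the automation point, here is how the password reset case from the sample table might translate into an automated check. The `request_password_reset` function and the in-memory outbox are hypothetical stand-ins for a real application and mail service; a real script would call the actual system instead.

```python
# Hypothetical stand-in for the system under test; a real script would call the app.
REGISTERED_EMAILS = {"user@example.com"}


def request_password_reset(email: str, outbox: list[str]) -> bool:
    """Queue a reset link for registered emails and report whether one was sent."""
    if email not in REGISTERED_EMAILS:
        return False
    outbox.append(f"Reset link for {email}")
    return True


def test_reset_with_valid_email() -> None:
    # Precondition: the email is registered.
    outbox: list[str] = []
    # Step: request a reset for that email.
    sent = request_password_reset("user@example.com", outbox)
    # Expected result: the system sends a reset link to the email.
    assert sent
    assert outbox == ["Reset link for user@example.com"]


def test_reset_with_unknown_email() -> None:
    # Negative case: an unregistered email should not produce a reset link.
    outbox: list[str] = []
    assert not request_password_reset("nobody@example.com", outbox)
    assert outbox == []


test_reset_with_valid_email()
test_reset_with_unknown_email()
print("all checks passed")
```

Because the written case already separates precondition, step, and expected result, each part maps onto a line or two of the script.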

Final thoughts

So again, what is a test case? Test cases are more than checklists: they’re structured tools that uphold quality, catch bugs, and prove a system works. From functional checks to usability tweaks, their diverse types tackle every angle, despite challenges like time, maintenance, and flaky results. Managed well and written with care, they’re a developer’s best friend.

In today’s rush-to-release world, test cases let teams move fast without breaking things, building confidence in every update. Want to see their power? Try writing one for a simple feature, or dive into a tool like Testingy. As software grows more complex, test cases remain a bedrock of quality. After all, they don’t just find bugs; they build trust in every line of code.